Exclusive data and insights on the power behind the data center buildout

Everyone knows data centers are bringing new demands for power. You’ve likely seen countless variations of the “up and to the right” chart (we know we have). The IEA projects global data center electricity consumption will increase by ~15% per year between 2024 and 2030, which compounds to more than a doubling; McKinsey forecasts a 3.5x increase in data center capacity demand between 2025 and 2030. It’s the kind of hockey stick that gets baked into every investor deck, utility IRP, and AI hype cycle thinkpiece.

Underneath the curve, what’s far less clear is how these centers will actually get powered. On-the-ground constraints are stacking up fast: multi-year backlogs for gas turbines, interconnection queues and policy headwinds for wind and solar, long deployment timelines for nuclear and geothermal. And all this is playing out against a backdrop of rising electricity prices in key markets.

So, with all these top-down projections and trends swirling around, in classic CTVC/Sightline fashion, we decided to go bottom up to figure out what’s really happening on the ground. We built the dataset we wished existed, tracking actual data center developments, their proposed powering models, and the grid plans (or lack thereof) to bring them online. Not the vibes, but the real demand signals that utilities, developers, and tech companies are trying to plan around. Then we wrote the report we wanted to read, our new Data Center Powering Models report.

This is a sneak preview of the full version, available for Sightline clients here, aiming to answer the tactical questions:

We used this report to launch full coverage of Data Centers, where Sightline clients enjoy full access to our datasets on data center project construction, supply contracts, and cost benchmarks. This also includes access to our charting & analytics tools, Signals AI newsfeed, and ongoing expert analysis.

Read on for highlights from the report, and download the public version of the report here.

Before we get into the data, let’s level-set.

At their core, data centers are the digital engines of modern life — racks of servers, networking gear, and storage systems powering everything from your Netflix queue to ChatGPT training runs to the inbox you’re reading this in. Now, the AI boom is pushing the engine into overdrive. The logic is simple: more compute → better models → more market share → a bigger slice of the AI pie. But in effect, this has resulted in a global arms race to build ever-larger, ever-hungrier data centers. It’s become one of the most complex, capital-intensive real estate and infrastructure bets today.

Not all data centers are created equal. They range from more familiar enterprise and cloud sites to edge, co-location, hyperscaler, and purpose-built AI factories, but it’s the last three driving most of the power demand. Historically, latency and fiber connectivity were the deciding factors in siting decisions. Now, it’s all about power availability, as access to megawatts — and even gigawatts — becomes the ultimate gating factor. Land, fiber, and water for cooling all still matter, but they’ve been knocked down the priority list, secondary to securing a firm, scalable, and ideally clean, power supply.

Typical facilities draw 10–100MW, but next-gen hyperscalers can exceed 300MW, with the largest announced campuses aiming for 3GW — or about 35% more than the combined capacity of the Vogtle Units 3&4 nuclear facilities that went online in 2023 and 2024.
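For anyone who wants to check the Vogtle comparison, here is a minimal sketch of the arithmetic. The per-unit rating of roughly 1,117MW is our assumption for illustration (it is not stated above), so treat the result as approximate.

```python
# Assumption (not from the text above): each Vogtle AP1000 unit is rated at ~1,117 MW.
VOGTLE_UNIT_MW = 1_117
CAMPUS_MW = 3_000  # largest announced campuses, per the text


def percent_larger(campus_mw: float, reference_mw: float) -> float:
    """Return how much larger campus_mw is than reference_mw, in percent."""
    return (campus_mw / reference_mw - 1) * 100


vogtle_3_and_4_mw = 2 * VOGTLE_UNIT_MW
excess_pct = percent_larger(CAMPUS_MW, vogtle_3_and_4_mw)

print(f"Vogtle 3&4 combined: {vogtle_3_and_4_mw} MW")
print(f"A 3GW campus is about {excess_pct:.0f}% larger")
```

Under that assumed rating, the result lands within a point or so of the "about 35%" figure.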

That adds up. In 2022, data centers consumed roughly 460TWh of electricity, or 2% of global usage. But the estimates we’ve seen for the five years out to 2030 have this demand climbing anywhere from 50% to over 150%.
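To put rough numbers on that range, here is a quick sketch. It assumes the growth percentages are applied to the 2022 figure of 460TWh; the estimates themselves may use a different baseline year, so read the outputs as illustrative bounds rather than forecasts.

```python
BASELINE_TWH = 460  # 2022 data center consumption, per the text


def projected_demand_twh(baseline_twh: float, growth_pct: float) -> float:
    """Apply a total (not annual) percentage increase to a baseline."""
    return baseline_twh * (1 + growth_pct / 100)


# Assumption: both growth figures are total increases over the 2022 baseline.
low_case_twh = projected_demand_twh(BASELINE_TWH, 50)
high_case_twh = projected_demand_twh(BASELINE_TWH, 150)

print(f"Low case (+50%):   {low_case_twh:.0f} TWh")
print(f"High case (+150%): {high_case_twh:.0f} TWh")
```

Since the upper estimate is "over 150%", the high case here is a floor on the top of the range, not a ceiling.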

So with all this demand, whatever the number ends up being, it begs the multi-billion-dollar question: how do you get data centers the massive, round-the-clock electrons they need (where they need them, when they need them) in the cleanest, most efficient way? That’s really the purpose of the Data Center Powering Models report and our ongoing coverage: to spell out the strategies for powering data centers in the short and long term.

Ok, with that context, let’s dive in. First the strategies, then the numbers.

In the race to get power fast, even as tech companies tout climate commitments, the short-term strategy is gimme, gimme, gimme. Data center owners need as much power as they can get, and it needs to be 24/7 reliable. At the same time, interconnection requests for new data centers are jamming transmission queues, delaying not just their own hookups but also other projects in line. Utilities are left scrambling to build new substations and transmission lines, with costs often passed to all ratepayers (i.e., not just the data center owners). And because hyperscalers want to create compute clusters near capacity they’ve built before, new data centers are increasingly sited in population centers with aging, constrained grids.

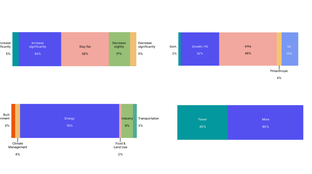

So how can they do it? Powering models can be on or off-grid, and use clean or fossil power, but each option comes with trade-offs in speed, cost, scalability, and emissions. As we’re showing below, using excess existing generation is one of the fastest ways to get power, but it’s restrictive. Data centers need to be scaled to meet the power, not the other way around. Backup power can act as a ‘bridging’ solution, but it’s expensive. Renewable microgrids seem great, but they’re tough to balance if totally disconnected from the grid. At the other end of the spectrum, we’ve seen some hyperscalers investing in SMRs, in the hopes that one day powering a data center will be as simple as building a tiny nuclear power plant next to it. Easy.

So how does all this get paid for? In the US, data centers often fall under “large load” schemes, where deals lock in multi-year capacity and can bundle in new power if operators help fund grid upgrades, attempting to shield other ratepayers from higher bills. These agreements often include long-term contracted capacity (anywhere from 2–20 years), exit fees for early termination, demand response obligations, and minimum load requirements, plus premiums to cover novel clean power or new infrastructure.

The biggest developers are often willing to just build or fund their own power. That’s where clean power tariffs come in, like Nevada’s Clean Transition Tariff, North Carolina’s Green Source Advantage Choice, and Duke Energy’s proposed Accelerating Clean Energy (ACE) tariff. They give hyperscalers a pathway to secure clean firm power, absorb grid costs, and set up a repeatable model, while also de-risking utility investments and new generation tech.

In parts of Europe, abundant hydropower and nuclear flip the equation, with discounted rates, tax breaks, or fast-track connections. But stressed grids like Dublin’s are starting to require on-site generation and storage before granting a hookup.

Most of the options for powering data centers quickly involve tapping into existing, excess capacity, or using a significant amount of backup generation. In order for sites to come online quickly, backup power must use a fuel that is separate from the grid. In other words, small turbines running on natural gas or RNG are needed, as batteries or LDES would still need a large grid connection to charge.

That’s why today’s builds tend to look the same: fast, firm, and often gassy. Gas is the default and backup power buys time, while some developers can repurpose coal plant connections or plug into regions with excess nuclear or renewables.

There’s much more variation in the long-term options. Most of these are at least partly additive, and almost all are clean. But they vary from 2-year timeframes (gas without CCS) to potentially over 10 years (advanced nuclear). Many of them don’t have firm cost ranges, so they could end up being very expensive, and they still face permitting unknowns.

We've got deeper dives into each of these powering models, plus examples of the players pursuing them, in the report. Get it here.

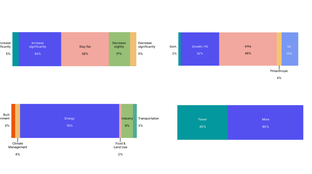

In our dataset, we’ve tracked 294 data center projects weighing in at 73.6GW of demand. The pipeline is constantly evolving, with new projects announced basically every day (we’re tracking those too 🤓). Over 8.9GW across 105 projects in the pipeline are targeting operation by end-2026, with 47 already under construction.
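A quick back-of-envelope on those pipeline figures, as a sketch. It assumes the 105 near-term projects are a subset of the 294 tracked, and it treats "over 8.9GW" as exactly 8.9GW, so the near-term numbers are lower bounds.

```python
PIPELINE_GW = 73.6        # total tracked demand, per the text
PIPELINE_PROJECTS = 294
NEAR_TERM_GW = 8.9        # "over 8.9GW", so treated here as a lower bound
NEAR_TERM_PROJECTS = 105  # targeting operation by end-2026


def average_mw(total_gw: float, projects: int) -> float:
    """Average project size in MW from a total in GW."""
    return total_gw * 1_000 / projects


avg_all_mw = average_mw(PIPELINE_GW, PIPELINE_PROJECTS)
avg_near_term_mw = average_mw(NEAR_TERM_GW, NEAR_TERM_PROJECTS)
near_term_capacity_share = NEAR_TERM_GW / PIPELINE_GW
near_term_project_share = NEAR_TERM_PROJECTS / PIPELINE_PROJECTS

print(f"Average project, whole pipeline: {avg_all_mw:.0f} MW")
print(f"Average project, end-2026 cohort: {avg_near_term_mw:.0f} MW")
print(f"End-2026 share of capacity: {near_term_capacity_share:.1%}")
print(f"End-2026 share of projects: {near_term_project_share:.1%}")
```

On these figures, the end-2026 cohort is roughly a third of the project count but only about an eighth of the capacity, which suggests the largest campuses sit further out in the pipeline.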

Since data centers themselves only take 12-18 months to build, power availability will be the key factor in determining whether the projects come online on time. More announcements are expected, and no widespread cancellations have come yet, reflecting strong financial and policy backing.

Meanwhile, geographic diversification is accelerating, with more activity in Asia and Europe — particularly as US grids get congested. The US leads with 106 announced sites, while mega-projects in the UK and France signal a wave of strategic AI infrastructure in Europe and Asia. Plus, 15 announced projects globally exceed 1GW capacity as AI drives demand for hyperscale buildouts, aka facilities designed for cloud, big data, and AI workloads. Next-gen centers optimized for AI training and inference are central to this wave. In fact, 5 projects top 3GW, with hundreds of billions in investment at stake.

Big Tech is unsurprisingly leading the charge here: we tracked over 20 announced projects from Microsoft, with Google and Amazon close behind, then Meta and Oracle. But governments are also increasingly getting into the game. The US DOE has 16 projects, while the EU’s EuroHPC JU has 11, each funding strategic projects to increase domestic compute capacity and maintain an edge in new tech.

Building data centers has always been capital-intensive, but costs are growing more complex. Tracked investment does not scale linearly with capacity, and costs remain hard to predict. With the median at $800m, or about $5.5m/MW installed, costs vary based on:

You can see in the chart below the investment vs. capacity of the majority of announced data centers, but significant outliers exist depending on these factors. Download the report for a more in-depth exploration of these costs.
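As a rough illustration of what the median figures imply, here is a sketch. Dividing the median investment by the median cost per MW only approximates a "typical" project size (a ratio of medians is not the median of ratios), and the straight-line estimate is a naive assumption that will miss the outliers.

```python
MEDIAN_INVESTMENT_M = 800   # $m, per the text
MEDIAN_COST_PER_MW_M = 5.5  # $m per MW installed, per the text

# Approximation only: ratio of two medians, not the median project size itself.
implied_median_mw = MEDIAN_INVESTMENT_M / MEDIAN_COST_PER_MW_M


def naive_cost_estimate_m(capacity_mw: float,
                          cost_per_mw_m: float = MEDIAN_COST_PER_MW_M) -> float:
    """Straight-line cost estimate in $m; real projects deviate from this."""
    return capacity_mw * cost_per_mw_m


naive_1gw_cost_m = naive_cost_estimate_m(1_000)

print(f"Implied typical project size: {implied_median_mw:.0f} MW")
print(f"Naive estimate for a 1GW campus: ${naive_1gw_cost_m / 1_000:.1f}bn")
```

The straight-line number is a starting point for comparison, not a benchmark for any individual project.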

Looking forward, while the upfront investment to build these centers will continue to rise, the critical question will be how efficiently they can be powered, and whether the technology to decarbonize the grid at scale can meet the rapidly expanding demand.

We asked, you answered, and experts weighed in on 2026

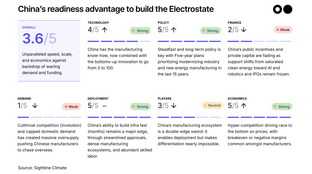

My visit to China's cleantech factories, labs, and HQs

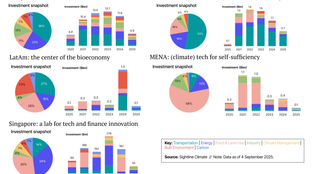

Get the data, insights, and case studies behind the next wave of climate tech